The following papers were recommended by the Semantic Scholar API

- ProRL: Prolonged Reinforcement Learning Expands Reasoning Boundaries in Large Language Models (2025)

- Behavior Injection: Preparing Language Models for Reinforcement Learning (2025)

- KTAE: A Model-Free Algorithm to Key-Tokens Advantage Estimation in Mathematical Reasoning (2025)

- KDRL: Post-Training Reasoning LLMs via Unified Knowledge Distillation and Reinforcement Learning (2025)

- Do Not Let Low-Probability Tokens Over-Dominate in RL for LLMs (2025)

- Beyond Accuracy: Dissecting Mathematical Reasoning for LLMs Under Reinforcement Learning (2025)

- Incentivizing Strong Reasoning from Weak Supervision (2025)


If I am not mistaken, your approach doesn't allow the massively parallel scaling of standard pre-training, so you shouldn't be constrained to just next-token prediction.

Have you considered other RL objectives inspired by pre-training besides next-token prediction, such as masked token prediction and next sentence prediction from BERT?

Good point! We focus on token-level prediction as the objective is more atomic and clear. Masked token prediction and next sentence prediction are quite interesting and worth exploring :)

Can you provide your fine-tuning code? I am interested in applying the same approach to the reasoning model MiMo-7B, using the same proxy model for entropy but pre-processing the dataset first and then using PPO with binary rewards. Do you think this is achievable on a single H100, using vLLM for generation and splitting the card between vLLM and training at a ratio of 30%/70%, with a shorter sequence length since MiMo doesn't tend to be very verbose? Also, when using the dataset, are you combining the question with the answer and doing next-token prediction on the whole text, or just on the answer? I have written my own training code, but I am really interested in seeing your implementation, as this needs memory efficiency and speed.
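A rough way to sanity-check the 30%/70% split described above is a back-of-envelope memory estimate. This is only a sketch under stated assumptions, none of which come from the paper or the thread: an 80 GB card, a model of roughly 7B parameters, and full fine-tuning with mixed-precision AdamW at about 16 bytes per parameter (bf16 weights and gradients plus fp32 master weights and two fp32 optimizer moments). Activations, the KV cache, and any extra PPO models (value or reference) are ignored, so the real requirement would be higher. The helper name `training_fits` is hypothetical.

```python
def training_fits(gpu_gb, train_fraction, params_billion, bytes_per_param):
    """Compare training-state memory with the training share of one GPU.

    Returns (budget_gb, needed_gb, fits). Activations are not counted.
    """
    budget_gb = gpu_gb * train_fraction
    # 1e9 parameters * bytes per parameter / 1e9 bytes per GB
    needed_gb = params_billion * bytes_per_param
    return budget_gb, needed_gb, needed_gb <= budget_gb


# Assumed values: 80 GB card, 70% reserved for training, ~7B parameters,
# ~16 bytes per parameter for mixed-precision AdamW.
budget, needed, fits = training_fits(80, 0.70, 7, 16)
print(f"budget={budget:.0f} GB, needed={needed} GB, fits={fits}")
```

Under these assumptions the optimizer state alone exceeds the training share, which suggests that a setup like this would need memory-saving measures (for example parameter-efficient fine-tuning, a lower-precision optimizer, or offloading) rather than plain full fine-tuning.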

Is it even possible to do RPT-zero, i.e. to purely RPT a model from scratch?

Then where does the initial CoT capability come from?

I think it's doable. CoT/reasoning is basically one of the results of the original GRPO implementation.

There must be a method that achieves both goals, injecting CoT/reasoning and RPT, since both rest on the same foundation.

It's already mentioned in the paper and in the author comments that this is a work in progress.

Just curious whether you are planning to publish RPT-14B or some equivalent model weights 🙂.

Is there any difference between standard next-token prediction and next-token reasoning at inference time? Does the RPT-trained model also need to think about each token, or does it only think about some difficult tokens? If so, how do we know which tokens are difficult? And if we follow this approach, will it greatly increase the inference time? I hope you can provide a complete reasoning example so that I can resolve these doubts. Thank you very much for your work.

From what I understand, next-token reasoning always uses chain of thought (a scratchpad / thoughts) to output intermediate tokens before the final prediction is output in a special format (\boxed{}).

This touches on a key distinction that many people miss about RPT.

Training vs. Inference: The Key Difference

During Training (RPT):

The model explicitly generates reasoning chains (...) before predicting each next token

It gets rewarded only when the final prediction matches the ground truth

This "thinking" process is forced and visible

During Inference (After Training):

The model behaves like a standard LLM - no explicit thinking steps

The reasoning capabilities have been "baked into" the model weights

It directly generates text without visible intermediate reasoning

Token Difficulty During Training

The paper uses entropy-based filtering to identify difficult tokens:

They use a smaller proxy model (DeepSeek-R1-Distill-Qwen-1.5B) to calculate entropy on top-16 next tokens

Tokens with high entropy (> threshold) are considered "difficult" and prioritized for training

Easy tokens (low entropy) are filtered out to focus compute on challenging predictions
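The filtering steps above can be sketched in a few lines. This is a minimal illustration, not the paper's implementation: the logits are toy values standing in for a proxy model's output, the threshold of 0.5 is arbitrary, and renormalizing the probabilities over the top-k tokens before computing the entropy is an assumption. The function names `top_k_entropy` and `select_hard_positions` are hypothetical.

```python
import math


def top_k_entropy(logits, k=16):
    """Entropy (in nats) of the next-token distribution restricted to the top-k logits.

    Assumption: probabilities are renormalized over the top-k tokens.
    """
    top = sorted(logits, reverse=True)[:k]
    m = max(top)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in top]
    total = sum(exps)
    probs = [e / total for e in exps]
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def select_hard_positions(logits_per_position, threshold, k=16):
    """Return indices of positions whose top-k entropy exceeds the threshold."""
    return [
        i
        for i, logits in enumerate(logits_per_position)
        if top_k_entropy(logits, k) > threshold
    ]


# Toy proxy-model logits for three positions over a 4-token vocabulary.
toy_logits = [
    [10.0, 0.0, 0.0, 0.0],   # proxy is confident: entropy near 0, filtered out
    [1.0, 1.0, 1.0, 1.0],    # proxy is unsure: entropy = ln(4), kept
    [2.0, 1.9, -5.0, -5.0],  # two plausible candidates: kept
]
hard = select_hard_positions(toy_logits, threshold=0.5)
print(hard)
```

Only the positions returned by `select_hard_positions` would then be used as training targets, which is how compute ends up concentrated on the challenging predictions.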

Complete Example

Let me show you what this looks like:

During RPT Training:

Input context: "Electric force grows with charge"

Expected next token: " size"

Model generates:

To determine the next token, I need to think about physics concepts.

Electric force grows with charge... this sounds like Coulomb's law.

The complete statement would be "Electric force grows with charge size and decreases with distance squared."

So the next token should be " size".

Alternative possibilities: " magnitude", " amount"

But "size" is most common in physics textbooks.

size

Reward: 1 (correct!) ✅
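The scoring step in the example above can be sketched as follows. This is an illustration under assumptions: it relies on the `\boxed{}` output format mentioned earlier in the thread, and it uses exact string equality, whereas the paper's actual matching rule may differ. The names `extract_prediction` and `next_token_reward` are hypothetical.

```python
import re

# Matches \boxed{...} with no nested braces inside.
BOXED = re.compile(r"\\boxed\{([^{}]*)\}")


def extract_prediction(rollout):
    """Return the content of the last boxed answer in the rollout, or None."""
    matches = BOXED.findall(rollout)
    return matches[-1] if matches else None


def next_token_reward(rollout, ground_truth):
    """Binary reward: 1.0 if the boxed prediction equals the true next token, else 0.0."""
    prediction = extract_prediction(rollout)
    return 1.0 if prediction == ground_truth else 0.0


rollout = (
    "Electric force grows with charge... this sounds like Coulomb's law. "
    "So the next token should be ' size'. \\boxed{ size}"
)
print(next_token_reward(rollout, " size"))       # correct prediction
print(next_token_reward(rollout, " magnitude"))  # wrong prediction
```

A rollout with no boxed answer receives a reward of 0.0 in this sketch, so the model is also pushed to respect the output format.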

During Inference (After Training):

Input: "Explain Coulomb's law"

Model output (direct generation):

"Coulomb's law states that the electric force between two charged objects is directly proportional to the product of their charges and inversely proportional to the square of the distance between them. The formula is F = k(q₁q₂)/r²..."

No Increased Reasoning Time

Important: The trained model doesn't slow down during inference because:

No explicit thinking steps are generated

The reasoning patterns learned during training are now encoded in the model weights

It generates text at normal speed, just with better quality

Why This Works

Think of it like learning to drive:

Training phase: You consciously think through every action ("check mirrors, signal, check blind spot...")

After training: These actions become automatic - you drive smoothly without conscious step-by-step thinking

Similarly, RPT training teaches the model to internalize reasoning patterns, which then manifest as better predictions without explicit reasoning steps during inference.

Key Insight from the Paper

The paper shows that RPT-14B matches the performance of much larger models (R1-Distill-Qwen-32B) while maintaining the same inference speed. This confirms that the reasoning capabilities are "distilled" into the weights rather than requiring additional computation at inference time.

Bottom line: RPT creates smarter models that reason better internally, not slower models that think explicitly during inference.

Sharing a video & written explanation of this paper - https://aipapersacademy.com/reinforcement-pre-training/

Excellent paper, guys! My question is whether RPT is done from scratch, or whether the base model's weights are used and then updated (so a re-pre-training process). I am really hoping to get a reply to this query.

No, it's not. They start from a distilled model.

I applied RPT to DeepSeek-R1-0528-Qwen3-8B using a small dataset of only around 500 samples, through the TRL GRPO implementation with vLLM for generation.

Running the MMLU-Pro benchmark on both the base model and the fine-tuned model, the overall result increased from 38.5% to 44.5%. (I ran it through lm-eval with max generated tokens = 2048, so some samples got truncated; with a higher token limit the values will probably increase.)

Check out the model at ykarout/RPT-DeepSeek-R1-0528-Qwen3-8B

Hey man, I want to ask whether you used GRPO for pre-training or for fine-tuning?

Hey man. Great question! This is actually a common point of confusion due to the paper's terminology.

The short answer: I used GRPO for fine-tuning, not pre-training from scratch.

Why the confusion exists:

The paper calls it "Reinforcement Pre-Training" (RPT), but this name is misleading. It's not actually pre-training a model from scratch. Instead, you need to start with a model that already has reasoning capabilities (at least for now, as they mention).

What RPT actually does:

Takes any existing text corpus and turns it into next-token prediction tasks

Instead of just predicting the next token directly, the model must "think through" and reason about what comes next

The model gets rewarded (score of 1) if it predicts correctly, 0 if wrong

This transforms any raw text into a dataset suitable for reinforcement learning, because the verifiable reward can be extracted directly: it is the real next token in the text. No heavy effort is needed to create datasets such as math problems with correct answers to verify, as in typical GRPO setups.
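The corpus-to-task conversion described above can be sketched like this. It is a simplified illustration: whitespace splitting stands in for a real tokenizer, and the function name `build_next_token_tasks` and the `min_context` parameter are hypothetical.

```python
def build_next_token_tasks(text, min_context=3):
    """Turn raw text into (context, target) next-token prediction tasks.

    Each task pairs a prefix of the text with the token that follows it;
    the target doubles as the verifiable reward signal.
    """
    tokens = text.split()  # assumption: whitespace split instead of a real tokenizer
    tasks = []
    for i in range(min_context, len(tokens)):
        tasks.append({"context": " ".join(tokens[:i]), "target": tokens[i]})
    return tasks


tasks = build_next_token_tasks(
    "Electric force grows with charge size and decreases with distance squared."
)
print(len(tasks))
print(tasks[2])
```

Every position in the text yields one task, so no manual annotation is involved; in practice the task list would presumably be filtered further, for example by the entropy criterion discussed earlier in the thread.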

Why it's called "pre-training":

The authors chose this name because you can use any corpus (books, papers, datasets) without needing manually annotated rewards, similar to how traditional pre-training uses vast amounts of raw text. That is the analogy: both can use any raw text from any source, which as you know can amount to trillions of tokens.

But it's really reinforcement fine-tuning on next-token reasoning tasks.

My implementation:

Started with: DeepSeek-R1-0528-Qwen3-8B (already a reasoning model)

Training method: Full fine-tuning using GRPO (no PEFT or LoRA)

Dataset: 500 diverse samples with unique next tokens that are not easy to predict, to push the model to reason well and improve.

Training time: ~5-6 hours on a single H200

Key difference: Used Dr. GRPO without reward scaling
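To make the last item concrete, here is a minimal sketch of group-relative advantages with and without standard-deviation scaling. It reflects one reading of "without reward scaling", namely that Dr. GRPO drops the division by the group's reward standard deviation; it is not the commenter's training code, and the function name `group_advantages`, the epsilon value, and the use of the population standard deviation are assumptions.

```python
from statistics import mean, pstdev


def group_advantages(rewards, scale_by_std, eps=1e-4):
    """Advantages for one prompt's group of sampled rollouts.

    scale_by_std=True  -> GRPO-style: (r - mean) / (std + eps)
    scale_by_std=False -> Dr. GRPO-style: r - mean (no std scaling)
    """
    baseline = mean(rewards)
    centered = [r - baseline for r in rewards]
    if not scale_by_std:
        return centered
    std = pstdev(rewards)  # assumption: population std; implementations may differ
    return [c / (std + eps) for c in centered]


# Binary next-token rewards for four rollouts of the same context.
rewards = [1.0, 0.0, 0.0, 0.0]
unscaled = group_advantages(rewards, scale_by_std=False)
scaled = group_advantages(rewards, scale_by_std=True)
print(unscaled)
print(scaled)
```

With binary rewards, dividing by the standard deviation inflates the advantages of groups where almost all rollouts agree; leaving the scaling out keeps the update size tied to the raw reward gap.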

The results validate that this "next-token reasoning" approach significantly improves the model's prediction accuracy. Some have suggested it should be called "Next-Token Reasoning" instead of "pre-training" to avoid confusion.

Happy to share the training code and dataset if you're interested! 🚀

Thank you for the detailed explanation, man! OK, I understand that it's not pre-training from scratch and is more like fine-tuning. But in the paper they do RPT and then show the RPT model's performance on further fine-tuning tasks, so I am still a bit confused about which paradigm it truly falls under and whether it's possible to use RPT for true pre-training.

Yes indeed. This comes entirely after the first round of RPT.

Round 1: Model -> RPT training -> RPT model ✅

The RPT model here is the one that shows the increased performance on the benchmarks.

Round 2: RPT model -> further training (e.g. SFT) -> RPT-SFT model ✅

This experiment is meant to prove another point: when the RPT model is further trained, e.g. with SFT, it also surpasses the performance of the non-RPT model undergoing RL then SFT. This implies that RPT also enhances the outcome of further training. Conclusion: you get not only the advantage of the first round of RPT but also an additional performance gain if you decide to do further training.

Please share the training code, hyped to try this myself. Cheers.

\n

Happy to share the training code and dataset if you're interested! 🚀

please share the training code, hyped to try this myself cheers.

> Yes indeed. This is entirely after the first round of RPT.
>
> Round 1: Model -> RPT training -> RPT Model ✅
>
> The RPT model here is the one that shows the increased performance on the benchmarks.
>
> Round 2: RPT Model -> further training (e.g. SFT) -> RPT-SFT model ✅
>
> This experiment is meant to prove another point: when the RPT model is further trained with, e.g., SFT, it also surpasses the performance of the non-RPT model undergoing RL then SFT. This implies that RPT also enhances the outcome of further training. Conclusion: you get not only the advantage of the first round of RPT but a further performance gain if you decide to do more training.

Hey, thanks for the detailed reply, could you please share your code?

Thanks for getting back. I’ve been tied up with memory research lately, so didn’t see your reply. I’ll test it tomorrow.

Can it be used to teach domain knowledge that the initial model didn't know?

Yes, through RPT training on a domain corpus, the model is rewarded for correct next-token predictions. This process makes the model learn domain-specific knowledge and explore the domain-specific reasoning patterns (behind the word correlations).

A very similar paper got published just yesterday. The idea is hot, results are great 🔥. They did not started from a base model and then did minimal instruction tuning before using RL. Instead of NTP they did next-segment reasoning, which allows for some variation but requires a reward model.

https://arxiv.org/abs/2509.19249

Abstract

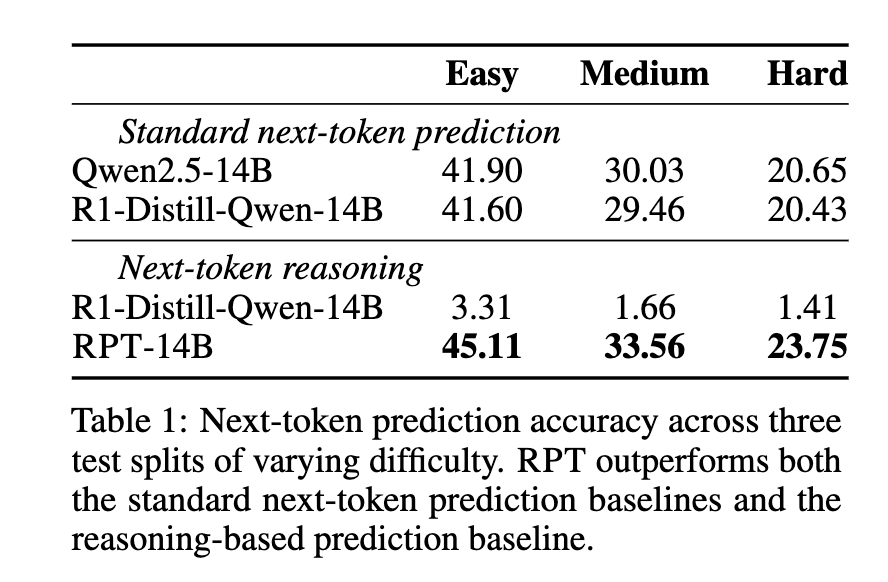

Reinforcement Pre-Training (RPT) improves language model accuracy through reinforcement learning and offers a scalable method for leveraging text data for general-purpose RL.

In this work, we introduce Reinforcement Pre-Training (RPT) as a new scaling paradigm for large language models and reinforcement learning (RL). Specifically, we reframe next-token prediction as a reasoning task trained using RL, where it receives verifiable rewards for correctly predicting the next token for a given context. RPT offers a scalable method to leverage vast amounts of text data for general-purpose RL, rather than relying on domain-specific annotated answers. By incentivizing the capability of next-token reasoning, RPT significantly improves the language modeling accuracy of predicting the next tokens. Moreover, RPT provides a strong pre-trained foundation for further reinforcement fine-tuning. The scaling curves show that increased training compute consistently improves the next-token prediction accuracy. The results position RPT as an effective and promising scaling paradigm to advance language model pre-training.

Community

Thanks for the question. According to Table 2 'Before RL' column, RPT achieves stronger performance on math problems before reinforcement finetuning.

We’ve also achieved positive results on the math datasets you mentioned. We're continuing to scale up and organize our work, and in the coming period, we’ll release evaluation results from larger-scale experiments, which will include the math datasets you're interested in.

I never thought RL could be used for pre-training

Excellent paper, but I wonder about the cost of training. The causal mask in the original GPT increases the efficiency of pre-training, but in this work I find it hard to bring a causal mask into RPT. Won't that increase the cost of RPT?

I wonder the same. In my interpretation it's pretraining in the sense that it's self supervised training on a curated dataset. But it's not the same as standard pretraining compute-efficiency wise
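A rough back-of-the-envelope sketch of the cost gap raised in the two comments above. With a causal mask, one forward pass supervises every position of a sequence, whereas RPT has to generate a reasoning rollout for each trained position. The group size of 8 is the figure mentioned elsewhere in this thread; the average reasoning length and the share of positions kept after filtering are purely hypothetical values chosen for illustration, not numbers from the paper. The sketch also ignores that autoregressive decoding is slower per token than a parallel forward pass.

```python
def rpt_generated_tokens(num_positions, rollouts_per_position, avg_reasoning_tokens):
    """Tokens the policy must generate to collect rewards for the given positions."""
    return num_positions * rollouts_per_position * avg_reasoning_tokens


def ntp_supervised_positions(sequence_length):
    """With a causal mask, one forward pass supervises every position at once."""
    return sequence_length


seq_len = 2048
rollouts = 8          # assumption: group size mentioned in this thread
reasoning_len = 500   # hypothetical average chain-of-thought length
kept_fraction = 0.1   # hypothetical share of positions kept after filtering

positions = int(seq_len * kept_fraction)
generated = rpt_generated_tokens(positions, rollouts, reasoning_len)
overhead = generated / ntp_supervised_positions(seq_len)
print(f"{positions} positions -> {generated} generated tokens "
      f"(~{overhead:.0f}x the sequence length)")
```

Changing `reasoning_len` or `kept_fraction` moves the ratio by orders of magnitude, which is why estimates of the overhead vary so widely.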

What would happen if you applied RPT recursively - having the model reason about each token within its own reasoning chain? Would meta-reasoning about the reasoning process itself lead to even better performance, or would the computational overhead outweigh the benefits? :)

In general, RPT encourages next-token reasoning on the curated, truncated dataset by rewarding the model against a reference (ground truth) that is well known to the user: it is simply the next token in the truncated text, which we can use to reward or penalize the model and so reinforce better reasoning. Your suggestion is smart, but the first issue, before computational overhead, is that you do not know in advance the next token the model will output within its own reasoning chain. Because that reference is unknown, there is nothing verifiable to reward or penalize against, and, at least under the GRPO approach, you cannot do RL without a known, verifiable reference.

I see the paper says RPT is initialized from a reasoning model and mentions investigating RPT from a standard base LLM under Future Work. I wonder how or whether the training and thought process would be different being initialized from a base LLM instead of a reasoning model

We're working on it. Stay tuned!

This is an automated message from the Librarian Bot. I found the following papers similar to this paper.

The following papers were recommended by the Semantic Scholar API

- ProRL: Prolonged Reinforcement Learning Expands Reasoning Boundaries in Large Language Models (2025)

- Behavior Injection: Preparing Language Models for Reinforcement Learning (2025)

- KTAE: A Model-Free Algorithm to Key-Tokens Advantage Estimation in Mathematical Reasoning (2025)

- KDRL: Post-Training Reasoning LLMs via Unified Knowledge Distillation and Reinforcement Learning (2025)

- Do Not Let Low-Probability Tokens Over-Dominate in RL for LLMs (2025)

- Beyond Accuracy: Dissecting Mathematical Reasoning for LLMs Under Reinforcement Learning (2025)

- Incentivizing Strong Reasoning from Weak Supervision (2025)

If I am not mistaken your approach doesn't allow the massively parallel scaling from standard pre training, so you shouldn't be constrained to just next token prediction.

Have you considered other RL objectives inspired by pre-training besides next token prediction? Like masked token prediction and next sentence prediction from BERT.

Good point! We focus on token-level prediction as the objective is more atomic and clear. Masked token prediction and next sentence prediction are quite interesting and worth exploring :)

Can you provide your fine-tuning code? I am interested in applying the same approach to the reasoning model MiMo-7B, using the same proxy model for entropy but pre-processing the same dataset first and then using PPO with binary rewards. Do you think this is achievable on a single H100, using vLLM for generation and splitting vLLM/training at a ratio of 30%/70%, with a shorter sequence length since the MiMo model doesn't tend to be very verbose? Also, when using the dataset, are you combining the question with the answer and doing next-token prediction on the whole text, or just on the answer? I have written training code myself but am really interested in seeing your implementation, as this needs memory efficiency and speed.

Then where does the initial CoT capability come from?

Just curious whether you are planning to publish RPT-14B or some equivalent model weights 🙂.

Is there any difference between standard next-token prediction and next-token reasoning at inference time? Does the RPT-trained model also need to think about each token during inference, or does it only think about some difficult tokens? But how do we know which tokens are difficult at inference time? And if we follow this approach, will it greatly increase inference time? I hope you can provide a complete inference example so that I can resolve these doubts. Thank you very much for your work.

From what I understand, next token reasoning is always using chain of thought / scratchpad / thoughts to output intermediate tokens before the final prediction is outputted in a special format (\boxed{})
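A minimal sketch of how such a verifiable reward could be computed, assuming the final prediction is wrapped in `\boxed{}` as described above and the reward is 1 for a correct prediction and 0 otherwise. The function names are hypothetical, and exact string matching is a simplification; the paper's own matching rule may differ (for instance in how whitespace or multi-token predictions are handled).

```python
import re

# Matches a \boxed{...} span without nested braces.
BOXED = re.compile(r"\\boxed\{([^{}]*)\}")


def extract_prediction(completion):
    """Return the content of the last boxed span in the completion, or None."""
    matches = BOXED.findall(completion)
    return matches[-1] if matches else None


def next_token_reward(completion, true_next_token):
    """Binary reward: 1.0 if the boxed prediction equals the real next token, else 0.0."""
    prediction = extract_prediction(completion)
    if prediction is not None and prediction == true_next_token:
        return 1.0
    return 0.0


completion = r"The text is listing primes: 2, 3, 5, so 7 should follow. \boxed{7}"
print(next_token_reward(completion, "7"))   # 1.0
print(next_token_reward(completion, "11"))  # 0.0
```

A completion with no boxed answer simply receives 0, so the model is never rewarded for reasoning that does not commit to a prediction.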

Sharing a video & written explanation of this paper - https://aipapersacademy.com/reinforcement-pre-training/

I applied RPT to DeepSeek-R1-0528-Qwen3-8B using a small dataset of only around 500 samples, with the TRL GRPO implementation and vLLM for generation.

Running the MMLU-Pro benchmark on both the base model and the fine-tuned model, the overall result increased from 38.5% to 44.5%. (I ran it through lm-eval with max generated tokens = 2048, so some samples got truncated; with a higher token limit the values will probably increase.)

Check out the model at ykarout/RPT-DeepSeek-R1-0528-Qwen3-8B

hey man, I want to ask if you used GRPO for pre-training or for fine-tuning?

That's a great idea, but the required computing power is excessive, estimated at 100 to 1000 times that of traditional pre-training. Moreover, too few experiments were conducted (possibly due to the excessively high computing requirements), which makes it feel like a semi-finished product that needs further optimization.

Not at all. I’ve run a replica of the training on the same model family, but the 8B version, with parameters almost exactly as outlined in the paper, on a small 500-sample dataset and a generous 8k-token generation budget. It finished in around 3.5 - 4 hours using a single H200 with 140 GB VRAM. Although the dataset is on the small side, I’ve seen around +10% improvement in the overall MMLU_Pro benchmark score.

I am confident this is going to grab even more attention with time as more people try and validate the results.

For this part of the paper (filtering the hard tokens), what was the context length, i.e., how many tokens were visible when making the next prediction? And which tokenizer did you use: the same as the model's, or just whitespace?

"We use the OmniMATH dataset [GSY+24] for reinforcement pre-training. OmniMATH contains 4,428 competition-level mathematical problems and solutions from official websites such as AoPS Wiki and AoPS forum. Since many tokens are easily predictable even without reasoning, we perform token-level data filtering before reinforcement pre-training. Particularly, we use Deepseek-R1-Distill-Qwen-1.5B as a small proxy model. For each token, we calculate the proxy model entropy on the top-16 next tokens. By applying an entropy threshold, we filter out low-entropy positions, prioritizing training on challenging tokens that require greater computational effort to predict."
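The filtering step in the quoted passage could look roughly like the sketch below. It assumes the proxy model's next-token probabilities are already available per position as plain lists, that the top-16 probabilities are renormalised before computing Shannon entropy, and that the threshold is chosen by the user. The quote does not specify the renormalisation or the threshold value, so both are assumptions, and the function names are hypothetical.

```python
import math


def top_k_entropy(probs, k=16):
    """Shannon entropy (in nats) of the k most likely tokens, renormalised to sum to 1."""
    top = sorted(probs, reverse=True)[:k]
    total = sum(top)
    if total == 0:
        return 0.0
    return -sum((p / total) * math.log(p / total) for p in top if p > 0)


def select_hard_positions(per_position_probs, threshold, k=16):
    """Indices of positions whose top-k entropy exceeds the threshold."""
    return [
        i for i, probs in enumerate(per_position_probs)
        if top_k_entropy(probs, k) > threshold
    ]


# Toy example: position 0 is nearly deterministic, position 1 is uncertain.
toy_probs = [
    [0.97, 0.01, 0.01, 0.01],
    [0.25, 0.25, 0.25, 0.25],
]
print(select_hard_positions(toy_probs, threshold=1.0))  # [1]
```

Only the uncertain position survives, which is the intended effect: training compute is spent on tokens the proxy model finds hard to predict.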

Yes, through RPT training on a domain corpus, the model is rewarded for correct next-token predictions. This process makes the model learn domain-specific knowledge and explore the domain-specific reasoning patterns (behind the word correlations).

Hello, it's a great article, and I have a few questions to ask:

- How many times longer does it take to train on a piece of data (about 2048 tokens long) using the NTR method compared to NTP? Should NTR be applied only to the last few tokens of a piece of data, or to all the tokens?

- How should we handle the situation if none of the 8 rollout data is the correct answer? Won't the model fail to learn in this case?
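To illustrate the second question: in GRPO-style training the advantage of each rollout is its reward relative to the group mean, so a group in which all 8 rollouts are wrong yields zero advantages and therefore no learning signal from that position. The sketch below is a generic group-relative advantage computation, not the paper's code; using the population standard deviation and the `eps` value are assumptions, and the `scale_by_std=False` branch corresponds to dropping the standard-deviation scaling, as I understand the Dr. GRPO variant mentioned earlier in this thread.

```python
from statistics import mean, pstdev


def group_advantages(rewards, scale_by_std=True, eps=1e-6):
    """Group-relative advantages: reward minus group mean, optionally divided by group std."""
    baseline = mean(rewards)
    centered = [r - baseline for r in rewards]
    if not scale_by_std:
        return centered
    std = pstdev(rewards)
    return [c / (std + eps) for c in centered]


all_wrong = [0.0] * 8
one_right = [1.0] + [0.0] * 7

print(group_advantages(all_wrong))                      # all zeros: no gradient signal
print(group_advantages(one_right, scale_by_std=False))  # one positive, seven slightly negative
```

The same happens when all rollouts are correct, so positions that are either too hard or too easy for the current model contribute nothing; this is one motivation for filtering positions by difficulty before training.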

A very similar paper got published just yesterday. The idea is hot, results are great 🔥. They did not started from a base model and then did minimal instruction tuning before using RL. Instead of NTP they did next-segment reasoning, which allows for some variation but requires a reward model.

Wow man, I was expecting an upgrade but not this fast. Thanks for sharing I took a quick look, RLTP now, the naming keeps keep getting, good start lol.

Actually, I remember one of the members commented exactly that on this thread: reasoning for next “segments”, if we want to use the paper's terminology. I think it’s a valid and harder quest which can definitely make results better. Just some quick thoughts that come to mind: for next token, you can simply use a code-based verification method; it's really boolean, yes or no. Longer segments can be tricky because a boolean check can’t apply, which leads us to the judge model you referred to. So there are two new dependencies. First, how accurate and policy-compliant should the judge model be at minimum, and how do you ensure that? Second, the extra compute required for every single batch of predictions (you can, for example, opt to request more than one score from the judge model for more accuracy, to offset the variation of a segment vs. a single token); the predictions themselves will also take longer to generate by the model under fine-tuning (one token vs. a segment). Super interesting, man, this will be my next lab for the coming, probably long, days/nights! Again, thanks for sharing. Are you taking a deep dive or just skimming through, though? Tell me more, man; we can work something out if you are up for it and have some (or more) free time.
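A small sketch of the "more than one score" idea from the comment above: instead of a boolean check, a judge scores each predicted segment several times and the scores are averaged. The `judge` callable, the `num_scores` parameter, and the word-overlap `toy_judge` are hypothetical stand-ins for illustration only; a real setup would call a reward model, and each extra score costs one more judge call per prediction.

```python
from statistics import mean


def segment_reward(judge, prediction, reference, num_scores=3):
    """Average several judge scores for one predicted segment."""
    scores = [judge(prediction, reference) for _ in range(num_scores)]
    return mean(scores)


def toy_judge(prediction, reference):
    """Stand-in judge: fraction of reference words that appear in the prediction."""
    ref_words = reference.lower().split()
    if not ref_words:
        return 0.0
    pred_words = set(prediction.lower().split())
    return sum(word in pred_words for word in ref_words) / len(ref_words)


print(segment_reward(toy_judge, "the sum of two primes", "sum of primes"))  # 1.0
print(segment_reward(toy_judge, "a product", "sum of primes"))              # 0.0
```

With a deterministic judge like this one the averaging changes nothing; it only pays off when the judge is a sampled model whose scores vary between calls.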

arXiv lens breakdown of this paper 👉 https://arxivlens.com/PaperView/Details/reinforcement-pre-training-7722-970960b1

- Executive Summary

- Detailed Breakdown

- Practical Applications
